Welcome to the first update in over a year. Topics covered:

- Why I haven’t been more forthcoming about development status.

- Development hell and an example of what the end of December looked like.

- Specifications for fpv.blue’s 2.4 GHz version.

- Timeline for the materialisation of the aforementioned product.

As a small introduction for people out of the loop, fpv.blue’s first version was on sale for a very short period at the end of 2017. No sales have been made since then, and all of the effort has shifted into designing a second revision working on 2.4 GHz. By “all of the effort” I mean a single person, the undersigned, working full-time since June 2018, which is 6 months and counting.

The Apology

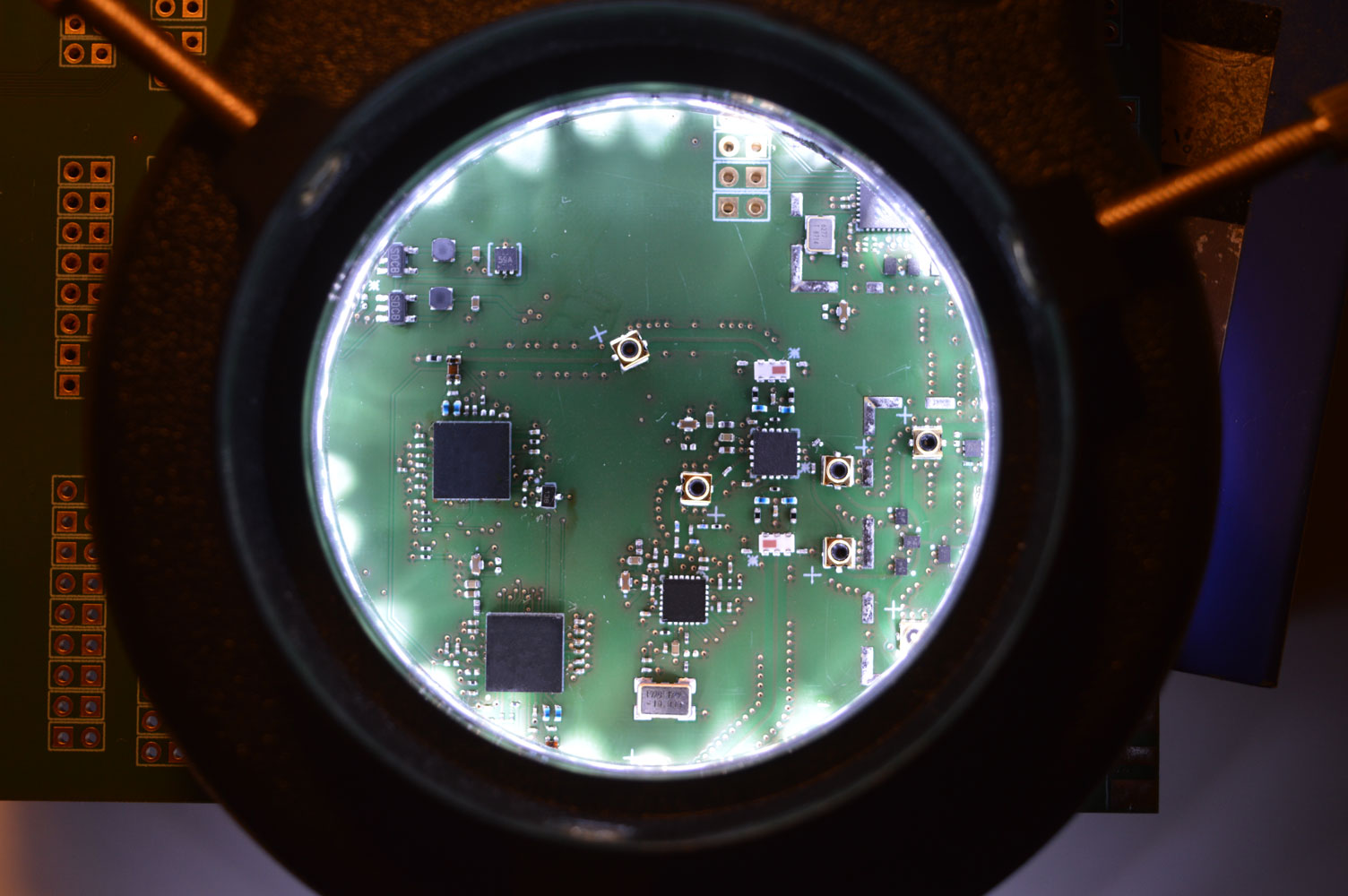

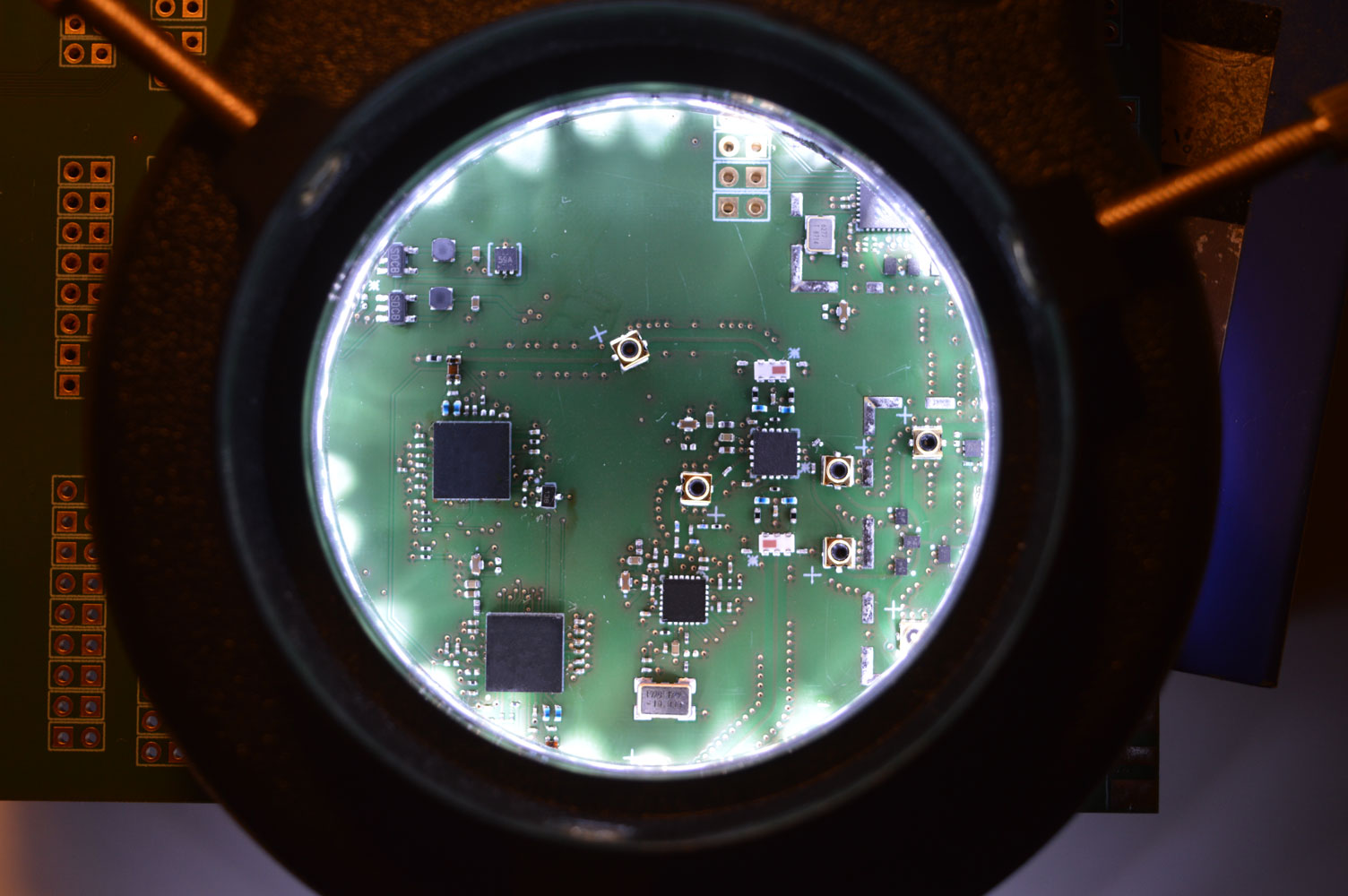

fpv.red – I mean, .blue, was first announced as little more than a proof of concept – one that could fly, but nothing more than a proof of concept nevertheless. I had very little experience with electronics design and manufacturing and was relying on an external freelancer to help me with it. I had to learn how to solder those tiny resistors the hard way, damaging expensive boards. I barely knew anything about embedded software development and there, too, I employed external help for part of the codebase, as I couldn’t fathom having enough time for it all (the initial code for the STM32F746 on fpv.blue’s first batch wasn’t written by me, even though I spent a lot of time on it before shipment).

All of this brought me to shipping a first batch in late 2017, in an economically unsustainable manner and with a technical result I’m not proud of. Learning from the wise people I had employed, I then started doing everything myself. I’m a perfectionist, so I had to let go from time to time, but I’m glad I went this route, as I now have a 360-degree view of the product and can in some cases work much more efficiently than when I had to coordinate with other people not physically around me. The end result of all of this integration, in my humble opinion, is phenomenal.

Anyway. Back to us. The way I had conducted .blue had to stop and did so just after shipping the first batch. I patiently collected my ideas for a second revision and waited until the end of my Computer Engineering degree at Trinity College Dublin in June of this year before jumping into developing FPV hardware full time again.

This time, I promised myself, I wouldn’t engage prospective customers before I had the ability to commit to performance and a timeline. I then went silent and had to watch people wondering if the project was dead, answering them in my inbox but seldom doing so publicly.

A story of the last couple of weeks of December

As December arrived, hardware development was pretty much completed and focus shifted to software. I had a flight booked for the 24th of December, when I was planning on leaving for my parents’ home, where I could test the results of my efforts in the real world. A few days before that, when it became clear I couldn’t make it, I moved my flight to the last day of December. The 24th of December ended up being the first day a video frame made its way through the system, and I was set to spend Christmas Day and New Year’s Eve working. After that, a few hours’ worth of scheduled work turned into a week of kernel hacking (underperforming DMA, hiccups and insane workarounds), the pattern repeated itself, and I finally moved my flight to the 4th of January.

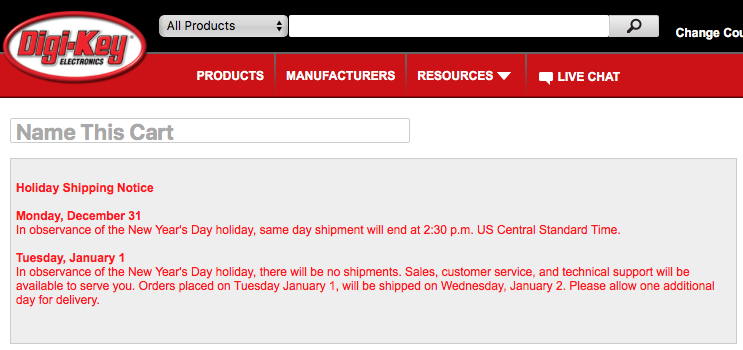

Last night, a bad move on a very messy workbench fried a voltage rail on the receiver and with it all of the associated chips. Magic smoke, old friend, we meet again. With DigiKey closed for the holidays on the first day of the year, there was simply no way I could get a replacement here before leaving.

I gave up.

I plan on taking advantage of my flight tickets to go see my family, and I will be back in the office on the 11th. I booked another flight this morning, from the 26th of January to the 1st of February and I cannot foresee any set of events where I don’t have data on the performance of the system by the time I come back.

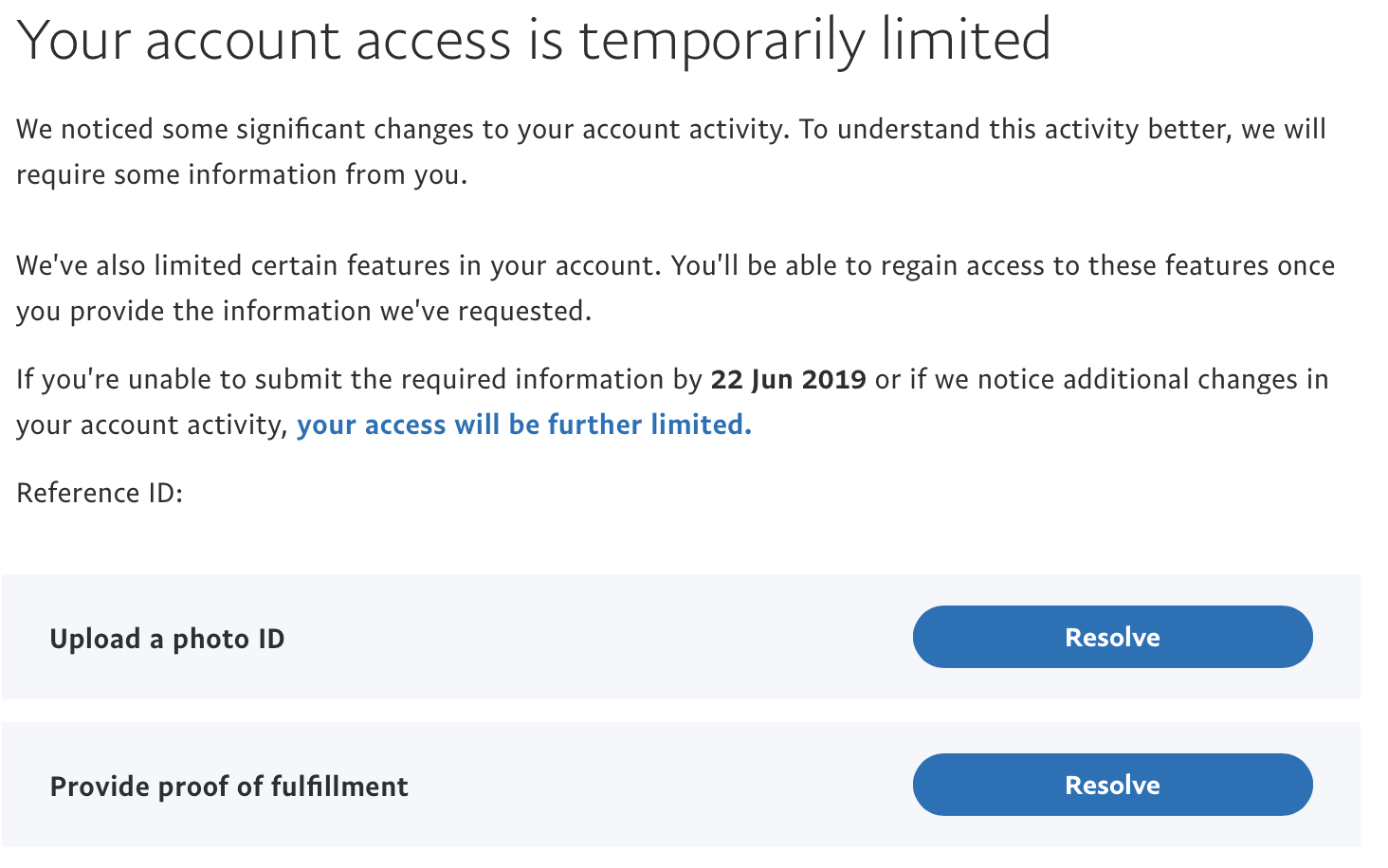

.blue’s 2.4 GHz specifications

This is what the system is currently designed to do and, to the best of my knowledge, it is true and accurate. It won’t perfectly match the product that is actually released, but as of now I have no reason to doubt any of this.

- 2.4 GHz: Worldwide, legal operation.

- Video, RC control and telemetry over the same radio frequency link. You will be able to stick the receiver at the back of your RC Radio and have it transmit the RC signal to the video transmitter.

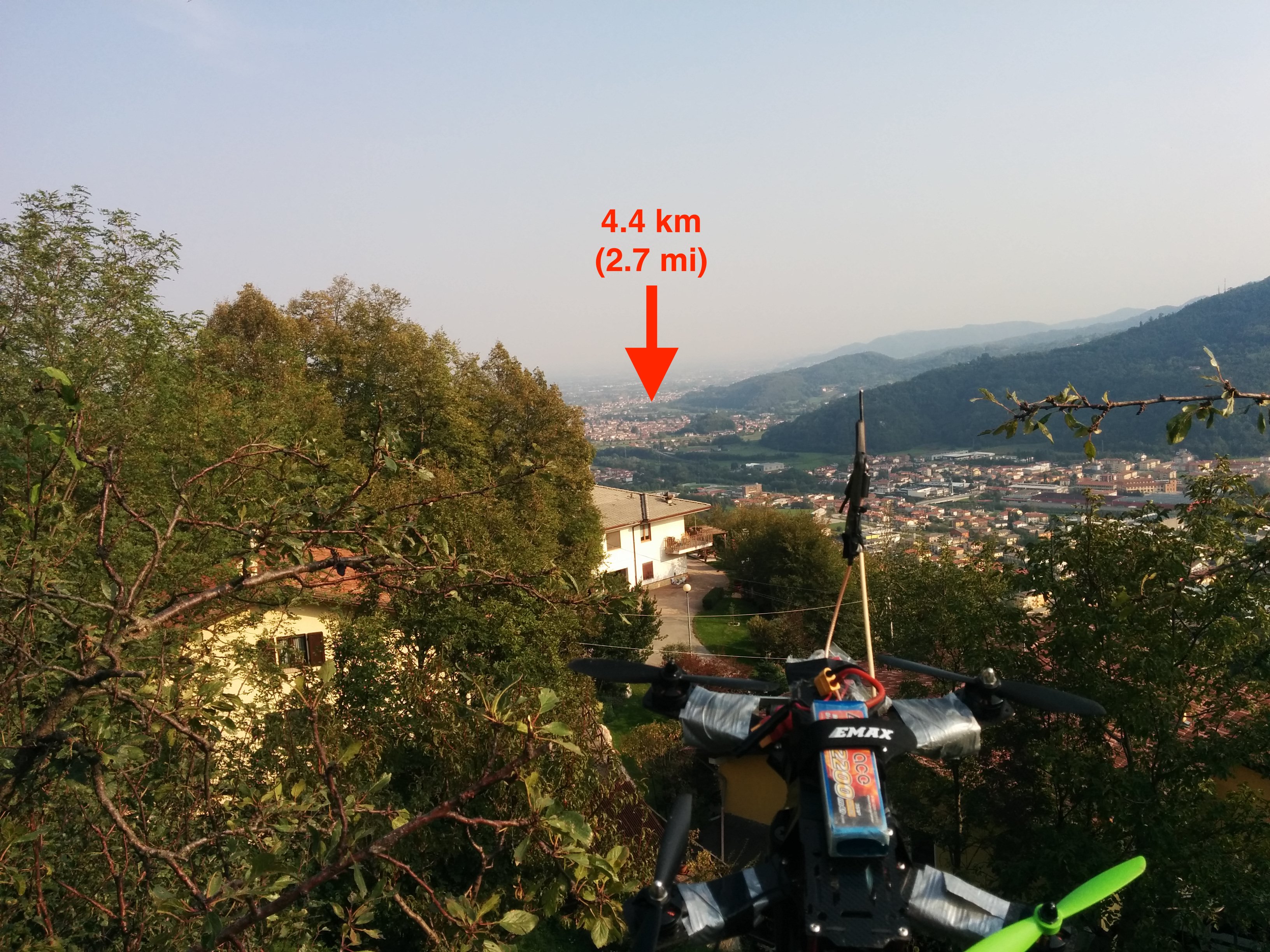

- State-of-the-art sensitivity, down to -105 dBm for video (-110 dBm for control) in ultra long range mode. In full data bandwidth mode, video sensitivity is -95 dBm or better. The system dynamically adjusts the link budget according to interference to provide the best tradeoff between quality and range.

- 1080p @ 30 fps. This mode will be available both via the HDMI input and via a custom camera. The standard camera will be 720p.

- Two video inputs, switchable in real time and connected with an extremely flexible, user-replaceable cable of arbitrary length. The inputs can be two HDMIs, one HDMI and one camera, one FLIR and one custom camera, etc.

- Real receiver diversity employing MRC (Maximal Ratio Combining) and advanced interference rejection.

- Fast interference recovery, as low as 8 ms for a short interference burst.

- Digital audio microphone on the transmitter going to the HDMI output at the receiver.

- Glass-to-glass latency still at 50 ms or better without interference, 40 ms or better with blueSync enabled.

- Currently being debated: Analog video output at the receiver.
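To put the sensitivity figures above in perspective, here is a back-of-the-envelope sketch of how much extra range the ultra long range mode could buy over the full data bandwidth mode. It assumes ideal free-space propagation (path loss growing by 20 dB per tenfold increase in distance) and identical transmit power, antennas and frequency in both modes; the function name is made up for this illustration. Real-world range depends on terrain, interference and antennas, so treat the result as an upper-bound style estimate rather than a promise.

```python
def free_space_range_ratio(sensitivity_a_dbm: float, sensitivity_b_dbm: float) -> float:
    """Return how many times farther mode A reaches than mode B in free space.

    Assumes everything except receiver sensitivity is identical
    (transmit power, antenna gains, frequency). Hypothetical helper,
    not part of any fpv.blue software.
    """
    # A more negative sensitivity means the receiver tolerates more path loss.
    extra_link_budget_db = sensitivity_b_dbm - sensitivity_a_dbm
    # Free-space path loss scales with distance squared: 20 dB per decade.
    return 10 ** (extra_link_budget_db / 20)


long_range_dbm = -105.0  # video, ultra long range mode (from the list above)
full_rate_dbm = -95.0    # video, full data bandwidth mode (from the list above)

ratio = free_space_range_ratio(long_range_dbm, full_rate_dbm)
print(f"Ultra long range mode reaches about {ratio:.2f}x as far in free space")
```

Since the full data bandwidth figure is quoted as "-95 dBm or better", the 10 dB gap used here is the largest the list implies; a better full-rate sensitivity would shrink the ratio.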

Target pricing is USD/EUR 180 for the transmitter, 260 for the receiver, and 30 to 90 for each video input.
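For readers unfamiliar with the MRC mentioned in the receiver diversity item of the list above, the following is a minimal textbook sketch of maximal ratio combining for a single symbol. It is not the fpv.blue receiver implementation: it assumes a flat channel whose complex gain and noise power are already known for each antenna, and all function and variable names are hypothetical.

```python
def mrc_combine(received, channels, noise_powers):
    """Estimate one transmitted symbol from several antenna branches.

    Model assumed per branch i: received[i] = channels[i] * symbol + noise_i.
    Each branch is weighted by conj(h_i) / noise_power_i, so stronger and
    cleaner branches contribute more to the estimate.
    """
    numerator = sum(
        h.conjugate() * r / n
        for r, h, n in zip(received, channels, noise_powers)
    )
    denominator = sum(abs(h) ** 2 / n for h, n in zip(channels, noise_powers))
    return numerator / denominator


def mrc_output_snr(channels, noise_powers, symbol_power=1.0):
    """With MRC, the combined SNR is the sum of the per-branch SNRs."""
    return sum(symbol_power * abs(h) ** 2 / n for h, n in zip(channels, noise_powers))


# Two antennas with made-up channel gains; noise omitted to check the algebra.
symbol = 1 + 1j
channels = [0.8 + 0.2j, 0.1 - 0.3j]
noise_powers = [0.1, 0.1]
received = [h * symbol for h in channels]

estimate = mrc_combine(received, channels, noise_powers)
combined_snr = mrc_output_snr(channels, noise_powers)
```

In this noiseless example the estimate recovers the transmitted symbol, and the combined SNR equals the sum of the two branch SNRs, which is the property that distinguishes MRC from simply switching to the stronger antenna.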

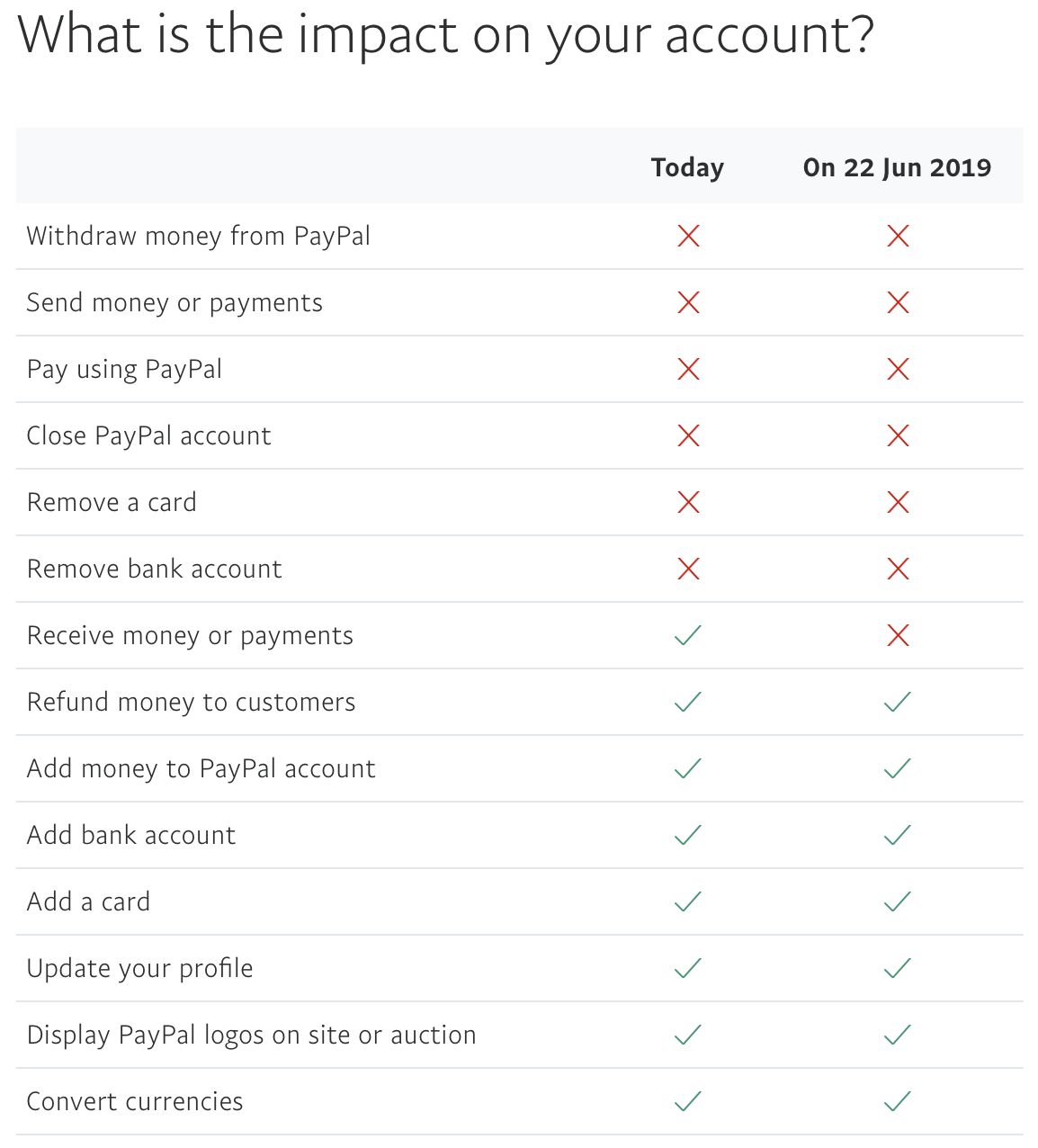

The next timeline

When I talk about the timeline for the “materialisation of the aforementioned product” I’m trying my best to subtly joke about a topic I find frightening. The last thing I want is to mislead people with wrong timing expectations. The following is optimistic, but I believe I can do it.

- January 11th – 25th: more software development, reaching a mature level as far as the performance of the radio frequency subsystem is concerned.

- January 26th-February 1st: real world testing and results.

- Beginning of February: test results publication and opening pre-orders.

- February (2 weeks): receiver’s PCB size shrink and integration, sent for manufacturing.

- February (1 week): video input subsystem/cable prototyping, required for the following transmitter PCB shrink.

- February (1 week) and March (1 week): transmitter HDI PCB shrink, prototype sent for manufacturing.

- March (1 week): final receiver bringup. If everything gets the green light, begin larger-scale manufacturing of the receiver for the pre-orders.

- March (1 week): final transmitter bringup. Just as above, if nothing goes wrong, the final HDI PCBs can be sent for manufacturing.

Allow one month for manufacturing and more time for timeline slips, and you get to the end of April 2019 for the first shipped pre-orders.

Thanks for reading.


February Update:

Unfortunately, due to personal reasons, I couldn’t work as much as I thought I could, and the timeline above is now postponed by four weeks. I hope to have real world test results by the end of February.

February 28th Update:

As usual, I’m late. The system is finished; I’m just putting some finishing touches on it this weekend, then booking a last-minute flight next week to go somewhere it can be tested at long range. Between that and the time it takes to edit and upload the video, the 15th of March sounds reasonable? That’s the internal deadline I will try to make.

March 7th Update:

Flight booked for the 13th of March. The machine learning algorithm deciding ticket prices made me an offer I couldn’t refuse.