A couple of months ago, when we first announced the product specifications, we had a video latency figure of around 80 milliseconds. That number had a note attached to it: a promise to substantially lower it, to 50 milliseconds or less.

This kept us busy for much longer than anticipated and required a hardware revision, but it finally happened. This post covers how FPV latency is usually measured, what FPV latency actually is, how we measure it (how would you measure sub-frame latencies?), and ends with some test results.

The wrong way of measuring FPV latency

The “industry standard” for measuring video latency is nothing short of terrible. It goes something like this: take a portable device, run some kind of stopwatch application on it, point the FPV camera at its screen, take a picture that includes both the FPV screen and the stopwatch, and subtract one reading from the other.

As you can see, several elements here limit the timing resolution: the freeware stopwatch not being written for this purpose (it may simply simulate a running stopwatch rather than implement one with live output), the exposure time of the digital camera if the two stopwatches are not horizontally aligned (rolling shutter), the refresh rate of the portable device’s display, the frame rate of the FPV video system, and so on. Together, these errors can easily exceed the actual latency of an analog FPV system, where glass-to-glass latency is usually around 20 milliseconds, a number that cannot be measured with the stopwatch method.

A definition of latency

Before measuring it, we had better define it. Sure, latency is the time it takes for something to propagate through a system, and glass-to-glass latency is the time it takes for something to go from the glass of a camera to the glass of a display. But what is that something? If it is a random event, does it happen across the whole image at the same time, or is it restricted to a point in space? If it happens across the whole camera lens at the same time, do we take latency to be the time the event needs to appear on the whole receiving screen, or on just a portion of it? The difference between the two might seem small, but it is actually huge.

Consider a 30 fps system, where every frame takes 33 milliseconds. Now consider the camera of that system. To put it simply, every line of the vertical resolution (say, 720 lines for 720p) is read in sequence, one at a time. The first line is read and sent to the video processor. About 16 milliseconds later the middle line (line 360) is read and sent to the processor. 33 milliseconds from the start the same happens to the last line (line 720). What happens when an event occurs on the camera glass at line 360 just after the camera has finished reading that line? That’s easy: it is going to take the camera the time of a full frame (33 milliseconds) to even notice that something changed. That information still has to be sent to the processor and down the line to the display, but even supposing all of that to be latency-free (it is not), in the worst case it takes the time of a full frame, 33 milliseconds, to propagate a random event from a portion of the screen.
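The worst case above can be checked with a few lines of arithmetic. The sketch below is only an illustration, assuming an idealised sensor that reads its lines at a constant rate with no blanking interval; the function name `sensor_wait_ms` and its parameters are made up for this example.

```python
def sensor_wait_ms(event_line, last_read_line, fps=30.0, lines=720):
    """Time until an idealised rolling readout next reads `event_line`.

    Assumption: lines are read at a constant rate with no blanking, and
    `last_read_line` is the line the camera has just finished reading.
    """
    frame_ms = 1000.0 / fps
    line_ms = frame_ms / lines
    lines_to_go = (event_line - last_read_line) % lines
    if lines_to_go == 0:
        # The line was read a moment ago: wait for the next full frame.
        lines_to_go = lines
    return lines_to_go * line_ms


# Event on line 360 right after the camera read it: one full frame.
worst = sensor_wait_ms(360, 360)
# Event on line 360 when the camera has just finished line 359: one line time.
best = sensor_wait_ms(360, 359)
print(f"worst case: {worst:.1f} ms, best case: {best:.3f} ms")
```

For the 30 fps, 720-line example this gives roughly 33 ms in the worst case and a small fraction of a millisecond in the best case, which is why a single measurement says very little and many samples are needed.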

That is what happens in analog systems, where the 60 interlaced fields per second are converted to 30 progressive frames and are affected by this latency. There is no such thing as zero latency. It’s just a marketing gimmick, sustained by the difficulty of actually measuring those numbers.

Measuring latency with sub-frame resolution

Or, measuring the propagation time of a random event from and to a small portion of the camera/display, because the other way is wrong (it only partially takes framerate into account).

A simple way of doing it is to use an Arduino to light up an LED and measure the time it takes for some kind of sensor to detect a change on the display. The sensor needs to be fast, and the most obvious choice for the job (a photoresistor) is too slow, with some manufacturers quoting as much as 100 milliseconds to detect a change in light. We need something more sophisticated: a photodiode, ideally with an integrated amplifier. A Texas Instruments OPT101P was selected for the job. The diode detects light changes quickly: put it under an LED table lamp and you will be able to see the LED switching on and off, something usually measured in microseconds. However, detecting the difference between two only slightly different brightness levels on a screen takes some tweaking, and you might be forced to increase the gain of the integrated op amp by adding something like 10 MΩ to its feedback loop.

However, the end result is worth it: you will have a system capable of measuring FPV latency with millisecond precision.
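On the software side, each measurement boils down to timestamping the LED and finding the first photodiode sample that rises clearly above its resting level. The sketch below shows that step on an already-recorded trace, in Python rather than Arduino code; it is not the firmware used for the tests in this post. The function name `detect_latency_ms`, the `margin` value and the sample format are all assumptions made for illustration.

```python
from statistics import mean


def detect_latency_ms(samples, led_on_ms, margin=20.0):
    """Return the delay between LED switch-on and the first bright sample.

    samples   -- list of (timestamp_ms, sensor_value), sorted by time
    led_on_ms -- timestamp at which the LED was switched on
    margin    -- hypothetical rise above the resting level that counts
                 as "the display changed"; needs tuning on real hardware
    """
    resting = [value for t, value in samples if t < led_on_ms]
    if not resting:
        return None  # no baseline recorded before the LED came on
    threshold = mean(resting) + margin
    for t, value in samples:
        if t >= led_on_ms and value > threshold:
            return t - led_on_ms
    return None  # the change never showed up in this trace


# Synthetic trace (not measured data): one sample per millisecond,
# with the display brightening 49 ms after the LED is lit at t = 2 ms.
trace = [(float(t), 100.0 if t < 51 else 180.0) for t in range(80)]
print(detect_latency_ms(trace, led_on_ms=2.0))
```

Taking the baseline from the samples recorded before the LED is lit keeps the threshold relative to the current screen brightness, which matters when the two light levels are only slightly different.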

fpv.blue’s latency

Now that we are done with the introductions, let’s look at the actual numbers.

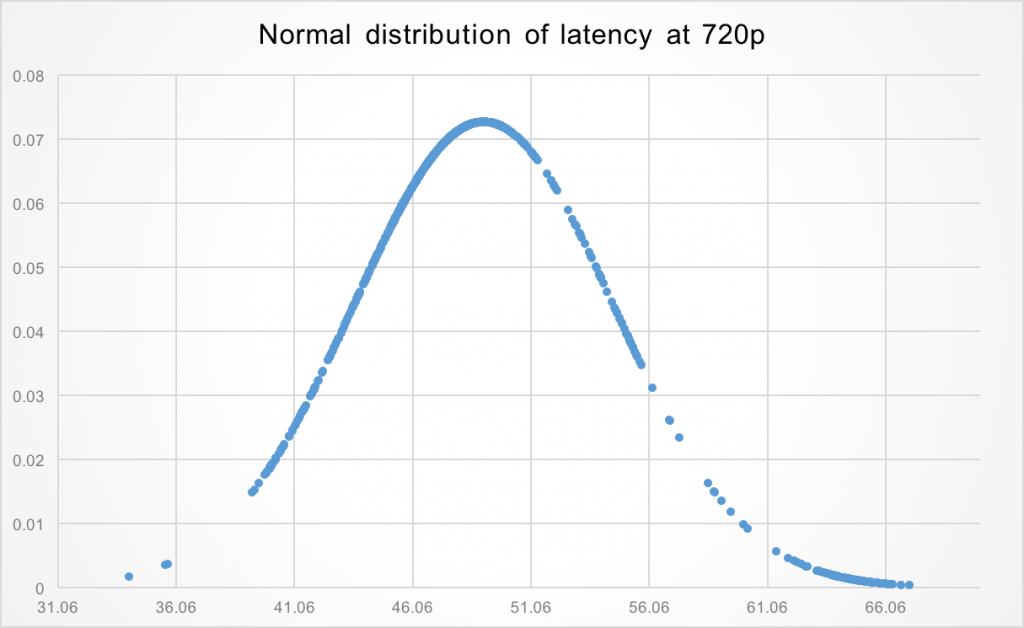

Over 1000 consecutive measurements, which you can download as a data dump from here, the average glass-to-glass latency for a random event in a restricted area of the screen is 49 milliseconds.

Minimum latency is 34 ms, maximum latency is 67 ms. Those numbers are compatible with what was explained above: they depend on the current camera read position and display output position. For a 60 fps system with 16 ms frame times, this is the average latency ± 16 ms, or 33 and 65 ms.
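The “average ± one frame” range can be reproduced with a toy model. The sketch below assumes, purely for illustration, that each measurement is a fixed pipeline delay plus two independent waits, one for the camera readout and one for the display refresh, each uniformly distributed between zero and one frame time. The fixed delay is simply derived from the reported 49 ms average and is not a measured quantity; the function names are hypothetical.

```python
import random


def latency_bounds_ms(average_ms, fps):
    """Average latency minus and plus one frame time."""
    frame_ms = 1000.0 / fps
    return average_ms - frame_ms, average_ms + frame_ms


def simulate_latencies(average_ms=49.0, fps=60.0, n=1000, seed=0):
    """Toy model: fixed delay + camera wait + display wait.

    Assumption: both waits are uniform in [0, frame time] and independent.
    The fixed part is back-computed from the average, not measured.
    """
    frame_ms = 1000.0 / fps
    fixed_ms = average_ms - frame_ms
    rng = random.Random(seed)
    return [
        fixed_ms + rng.uniform(0.0, frame_ms) + rng.uniform(0.0, frame_ms)
        for _ in range(n)
    ]


low, high = latency_bounds_ms(49.0, 60.0)
samples = simulate_latencies()
print(f"model bounds: {low:.1f} to {high:.1f} ms")
print(f"simulated min/mean/max: {min(samples):.1f} / "
      f"{sum(samples) / len(samples):.1f} / {max(samples):.1f} ms")
```

With the exact 16.7 ms frame time the bounds come out at about 32.3 and 65.7 ms, close to the rounded 33 and 65 ms above and to the measured 34 and 67 ms.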

So, there you have it, latency is now under 50 milliseconds, as promised.

Can it be lower?

Uhm. Not really. Maybe it could be possible to cut a total of 2-5 milliseconds with months of work, but such an optimisation is not planned at this stage.

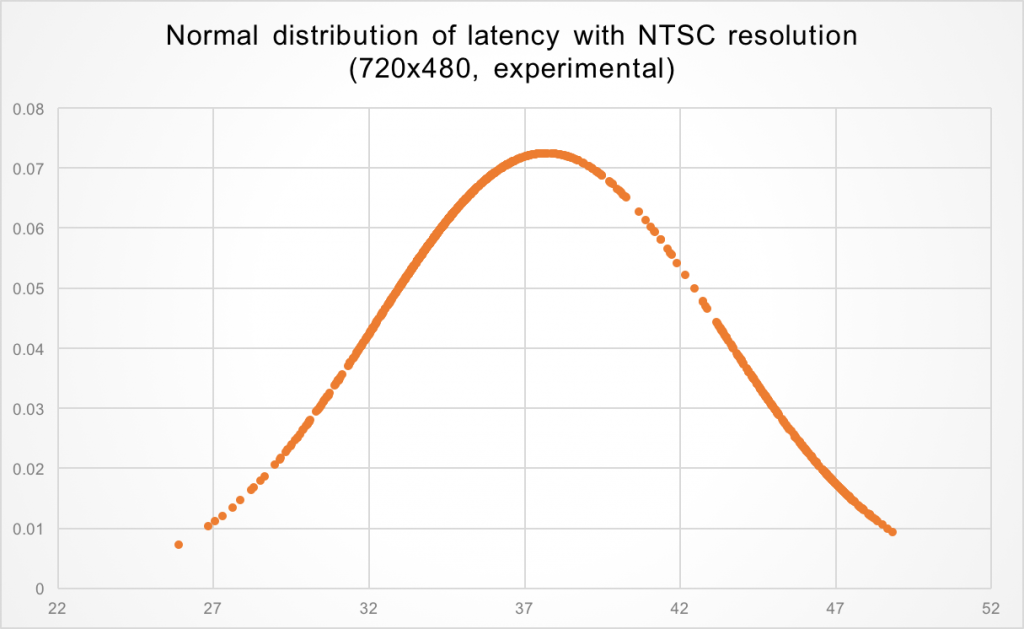

However, that is for 720p. If you are ok with compromising on video quality and using speciality cameras that can output very high frame rates, then yes, it is possible to go even lower. In the following example the average latency is 38 milliseconds.

This was achieved with a frame rate of around 80 fps at both camera and display. Some cameras can go even faster and output VGA (not NTSC) at 120 fps, but this wasn’t tested.

However, for sub-30-millisecond latency, that is probably the way to go.

I, and many other FPV pilots, prefer the 50-60fps range of analog systems, especially for racing. I hope your system will include the ability to switch between 720p30 and 480p60/576p50, or just 576p60 if possible. Connex prosight has already captured the 720p30 market, but digital FPV at higher framerates (and eventually, resolutions) is still a sorely empty field.

The 60 interlaced fields per second of analog systems are usually converted to 30 progressive frames, so what our system is offering, 60 fps progressive, is already better than the 30 progressive of analog systems. Different resolutions and frame rates are being worked on 🙂

50 ms latency, at what resolution?

What data rate?

The recording of the stopwatch certainly is more accurate than ~20ms. It’s mostly in the 2-4ms range unless you’re purposely trying to fuck up the test with terrible equipment.

Basically, while your method is slightly more accurate, it still looks like a marketing plot.

Besides, you’re writing that you have 67ms max latency and declare “sub 50ms” latency because the average is 49ms. What people really care about though is either the max latency (67ms) or the mean latency during a fast paced/rapidly changing image (which is probably also close to 67ms)

Now then again, I do hope you succeed at same-or-lower perceived user latency than your competitors, in a lower-priced package (especially since the new Connex stuff is out and looks pretty good). So, good luck!

From our tests, latency is not affected by how fast the picture is moving, but by the position of the input/output devices instead. You can read about it in the post. You can also take a look at the full data dump, where the 80th percentile (not average) latency is 49 milliseconds, nowhere close to 67.

I’m sorry you feel that way. This is nowhere close to a marketing plot. I suggest you read the post again if you have time and point out any inaccuracies if you wish to; a lot of time went into it.

Seriously ??

You see the justified scientific way, presented with raw data, distribution curves and even a detailed explanation, and you call it a marketing plot just because you can’t understand it? So what are your claims: that a stopwatch recording, captured by a second camera for comparison, is accurate to around 2-4 ms? Sure, if we’re talking about 500-1000 fps cameras and a stopwatch with millisecond resolution and a sufficient display update rate (indeed, where did you find this one?). Otherwise, you would need to do some really advanced post-processing to get said results.

When is the product due for release, and what is the anticipated pricing?

Would you mind giving comment or feedback on the method of measuring latency that I demonstrate here:

https://www.youtube.com/watch?v=SfbPaRTiB1g

Thanks in advance.

Hey, I have seen the video before. You are doing a good job, and your results are pretty good for the method used. However, you still have pretty big instrumental errors, and there is little you can do to overcome them. One of the biggest advantages of using a photodiode is automation. You can simply leave the test running for a few minutes and collect hundreds of samples with no effort (the biggest effort being staying away from your computer so that your body doesn’t influence the results by acting as an antenna, at least in this setup). The cost of the photodiode is very small and Arduinos are common; my personal view is that you could have set this system up in the time it took to make that video.

Hi! Did you test the latency at a resolution of 1920x1080 or even 4K? Thanks!

No. You can expect a modest increase at 1080p, and our system is not going to support 4K (1080p might be supported in the future).